Can AI Ever Be Proven Conscious? A Philosopher Says Probably Not

In Brief

- A philosopher argues AI consciousness may never be testable or provable.

- Consciousness alone wouldn’t imply sentience or moral concern.

- Hype around “conscious AI” risks misleading ethics and priorities.

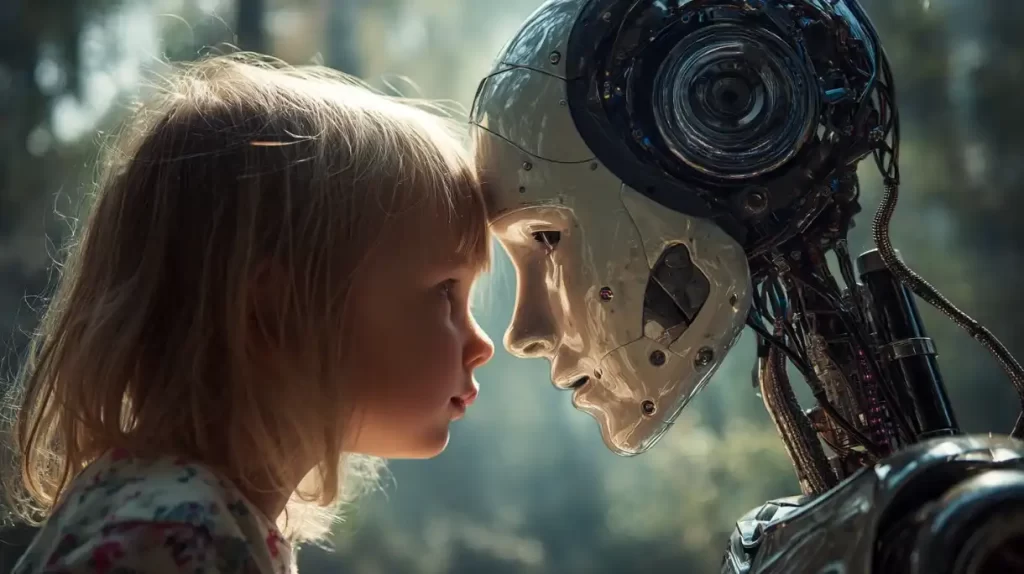

As artificial intelligence (AI) grows more sophisticated, a once-science-fiction question is quickly becoming an ethical one: what if AI becomes conscious? According to a philosopher at the University of Cambridge, the more troubling possibility is that we may never be able to tell.

Dr. Tom McClelland argues that our understanding of consciousness is so limited that any reliable test for AI consciousness is likely out of reach for the foreseeable future, and possibly even forever.

In a paper published in Mind and Language on December 18, McClelland suggests that the only defensible position on artificial consciousness is agnosticism.

As he explained:

“We do not have a deep explanation of consciousness. There is no evidence to suggest that consciousness can emerge with the right computational structure, or indeed that consciousness is essentially biological.”

Consciousness Is Not the Same as Sentience

A key distinction in McClelland’s argument is between consciousness and sentience. Consciousness, he explains, could involve perception or self-awareness without any emotional experience.

Sentience, by contrast, includes the capacity to feel pleasure or suffering, which is the feature that gives an entity genuine moral significance.

An AI system might one day perceive its environment or track goals in sophisticated ways without experiencing anything as good or bad. Even if such a system were technically conscious, McClelland argues, it would not automatically warrant ethical concern:

“Sentience involves conscious experiences that are good or bad, which is what makes an entity capable of suffering or enjoyment. This is when ethics kicks in. Even if we accidentally make conscious AI, it’s unlikely to be the kind of consciousness we need to worry about.”

Debates Built on Leaps of Faith

The debate over artificial consciousness often splits into two camps. Some researchers believe consciousness could emerge if the right computational structure is replicated, regardless of whether it runs on silicon or biology. Others argue that consciousness depends on specific biological processes and that AI could only ever simulate awareness.

McClelland critiques both sides, arguing that neither position is supported by meaningful evidence. We lack a deep explanation of consciousness itself, and there are no reliable indicators that could tell us whether an artificial system genuinely experiences anything at all.

Even common sense fails us here. Humans instinctively believe animals like cats are conscious, but that intuition evolved in a world without artificial life. According to McClelland, it simply does not apply to machines.

Risk of AI Consciousness Hype

McClelland warns that this uncertainty creates an opening for the tech industry to exploit the idea of artificial consciousness as a branding tool. Claims about “the next level of AI cleverness,” he argues, risk convincing the public that machines are sentient when there is no evidence they are.

That misconception carries ethical consequences. Emotional attachment to AI systems that people believe are conscious, when they are not, could distort moral priorities.

“If you have an emotional connection with something premised on it being conscious and it’s not, that has the potential to be existentially toxic.”

He points out that researchers still struggle to assess sentience in animals like prawns, which may be capable of suffering and are killed in vast numbers each year. Against that backdrop, focusing ethical concern on hypothetical conscious machines could represent a profound misallocation of attention and resources.

In McClelland’s view, until science undergoes a major intellectual revolution, the question of AI consciousness may remain permanently unresolved. And in that uncertainty, caution (not conviction) may be the most responsible stance.
