Researchers Uncover Hidden Bias in Cancer Diagnosis AI

In Brief

- Cancer diagnosis AI shows accuracy gaps across demographic groups.

- Models can infer patient traits from tissue images, creating hidden bias.

- A new method sharply reduces bias without retraining from scratch.

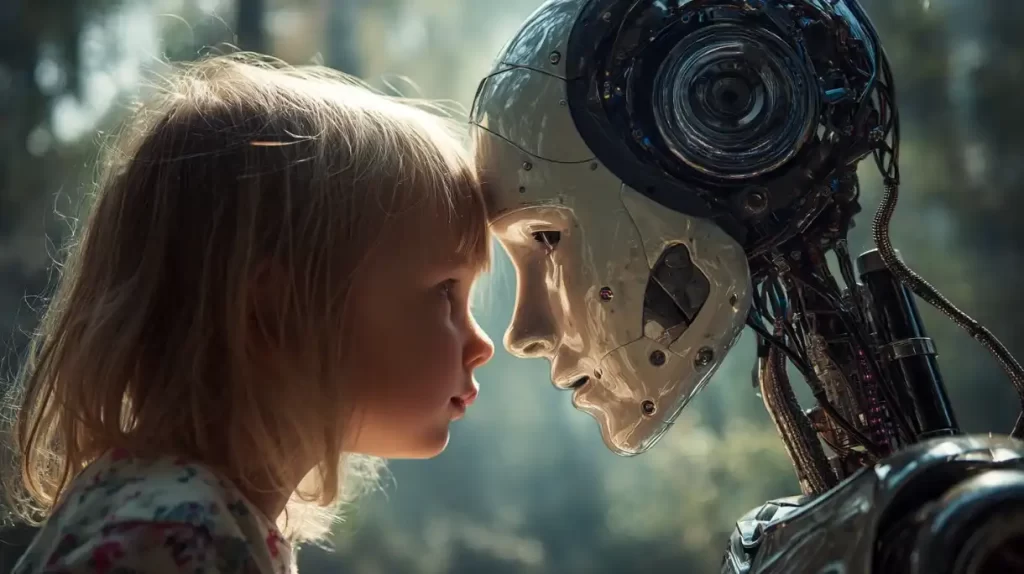

Artificial intelligence (AI) has become a powerful tool in cancer diagnosis, capable of scanning pathology slides with remarkable speed and accuracy. A new study, however, reveals a surprising and unsettling twist.

Cancer AI systems do more than detect disease. They also pick up on who the patient is, and that information can quietly influence their decisions.

Researchers at Harvard Medical School have found that widely used AI models for cancer pathology perform unevenly across different demographic groups, with diagnostic accuracy varying by race, gender, and age.

The findings, published on December 16 in Cell Reports Medicine, raise urgent questions about fairness, reliability, and trust in medical AI.

When AI Sees More Than Disease

Pathology has long been considered one of the most objective areas of medicine. A pathologist examines thin slices of tissue under a microscope, searching for visual cues that signal cancer type and stage. For humans, those slides reveal disease and not personal identity.

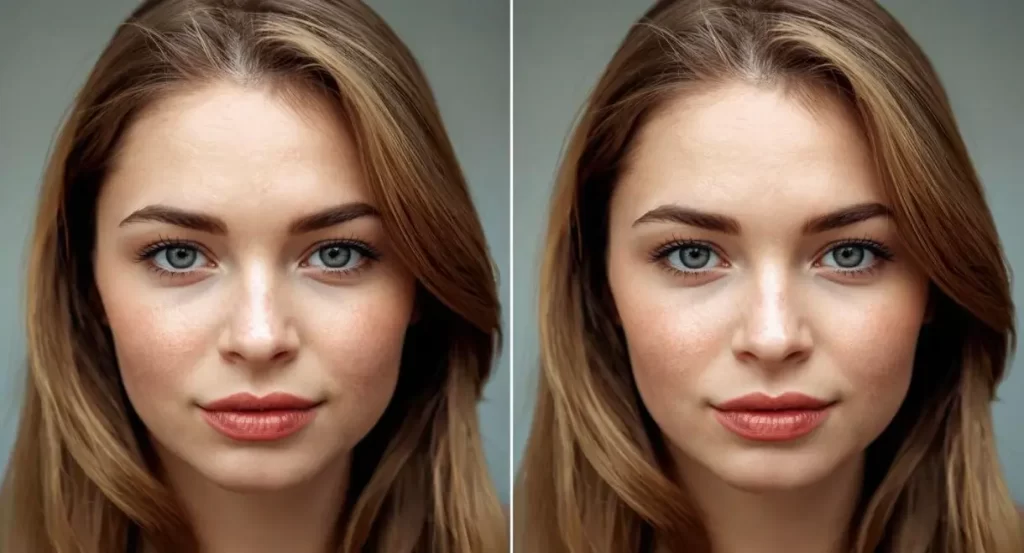

AI systems, however, see more. The Harvard-led team discovered that pathology AI models can infer demographic characteristics directly from tissue images, even though such information should be irrelevant to diagnosis. Once a model has learned those signals, it may rely on them when making predictions, whether or not its developers intended it to.

According to senior author Kun-Hsing Yu of Harvard Medical School:

“Reading demographics from a pathology slide is thought of as a ‘mission impossible’ for a human pathologist, so the bias in pathology AI was a surprise to us.”

Where Bias Enters The System

To investigate the issue, researchers evaluated four commonly used pathology AI models across a large, multi-institutional dataset spanning 20 cancer types. In nearly one-third of diagnostic tasks, the models showed measurable performance gaps.

Some struggled to classify lung cancer subtypes in African American and male patients. Others showed reduced accuracy for breast cancer in younger patients or for renal, thyroid, and stomach cancers in specific demographic groups.
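A performance gap of this kind can be checked with a simple per-group comparison. The sketch below is only an illustration, not the study's evaluation code: the function names, the group labels "A" and "B", the class labels, and the toy predictions are all made up, and accuracy is assumed as the metric even though the article does not say which metric the researchers used.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} for parallel lists of labels, predictions, and group tags."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Largest accuracy difference between any two groups."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical toy data: two cancer subtypes, two demographic groups.
y_true = ["type_x", "type_y", "type_x", "type_y", "type_x", "type_y", "type_x", "type_y"]
y_pred = ["type_x", "type_y", "type_x", "type_y", "type_x", "type_x", "type_y", "type_y"]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)
print(per_group)                # accuracy for each group
print(accuracy_gap(per_group))  # difference between best and worst group
```

In this toy data the model is right on every group A case but on only half of the group B cases, so overall accuracy alone would hide the disparity.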

The team identified three main drivers behind these disparities. The first is uneven training data: some populations are underrepresented in medical datasets. But the problem runs deeper.

Second, certain cancers occur more frequently in specific demographic groups, so AI systems can become disproportionately accurate for those populations while underperforming elsewhere.

Third, the models appear to latch onto subtle molecular signals, such as mutation patterns, that correlate with demographics rather than with the disease itself. These shortcuts can skew diagnoses.

Fixing Bias Without Rebuilding AI

Rather than abandoning pathology AI, the researchers focused on correcting it. They developed a framework called FAIR-Path, which teaches AI models to focus more on disease-specific features while suppressing attention to demographic cues.

When the framework was applied, diagnostic disparities dropped by roughly 88%, a dramatic improvement achieved without retraining models from scratch. The results suggest that fairness in medical AI is not out of reach, but it requires deliberate design choices and routine bias testing.
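To make the "roughly 88%" figure concrete, here is a minimal sketch of how a relative reduction in disparity could be computed. The article does not say how the researchers measured disparity, so the function name and both gap values below are hypothetical numbers chosen only to illustrate the arithmetic.

```python
def relative_reduction(gap_before, gap_after):
    """Fraction by which a disparity shrank, relative to its starting size."""
    if gap_before <= 0:
        raise ValueError("gap_before must be positive")
    return (gap_before - gap_after) / gap_before


# Hypothetical values: a 10-point accuracy gap shrinking to 1.2 points.
gap_before = 0.100
gap_after = 0.012

print(f"{relative_reduction(gap_before, gap_after):.0%}")  # prints "88%"
```

A check like this could be rerun whenever a model or its data changes, which is one simple form the routine bias testing mentioned above might take.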

As AI becomes increasingly embedded in healthcare, the study serves as a reminder that accuracy alone is not enough. For medical AI to truly support patients and clinicians, it must work reliably for everyone, not just the majority.
