AI Learns Math Better by Arguing With Itself

In Brief

- Letting multiple AI agents debate solutions significantly improves math accuracy.

- Structured disagreement reduces hallucinations and logical errors.

- The approach could make AI more reliable beyond math tasks.

Artificial intelligence (AI) models can sound confident while being completely wrong, especially when solving math problems. Now, researchers say the fix isn’t training bigger models but having AI models argue with each other.

A new framework developed by researchers at South China Agricultural University and Shanghai University of Finance and Economics shows that AI agents debating answers among themselves can dramatically improve accuracy and reduce hallucinations.

The study, published in the Journal of King Saud University Computer and Information Sciences, suggests that structured disagreement may be the key to more reliable artificial intelligence.

Instead of trusting a single model’s reasoning, the system forces multiple AI agents to challenge, refine, and ultimately agree on an answer, much like a panel of human experts.

Why AI Struggles With Math and Logic

Large language models excel at generating fluent text, but they often stumble on tasks that require strict logic, consistency, or multi-step reasoning. In math problems, these failures can appear subtle, with answers that look plausible but fall apart under scrutiny.

This weakness limits AI’s usefulness in education, research, and professional decision-making, where accuracy matters more than eloquence. The researchers set out to address this problem by rethinking how AI arrives at answers in the first place.

Letting AI Argue Instead of Guess

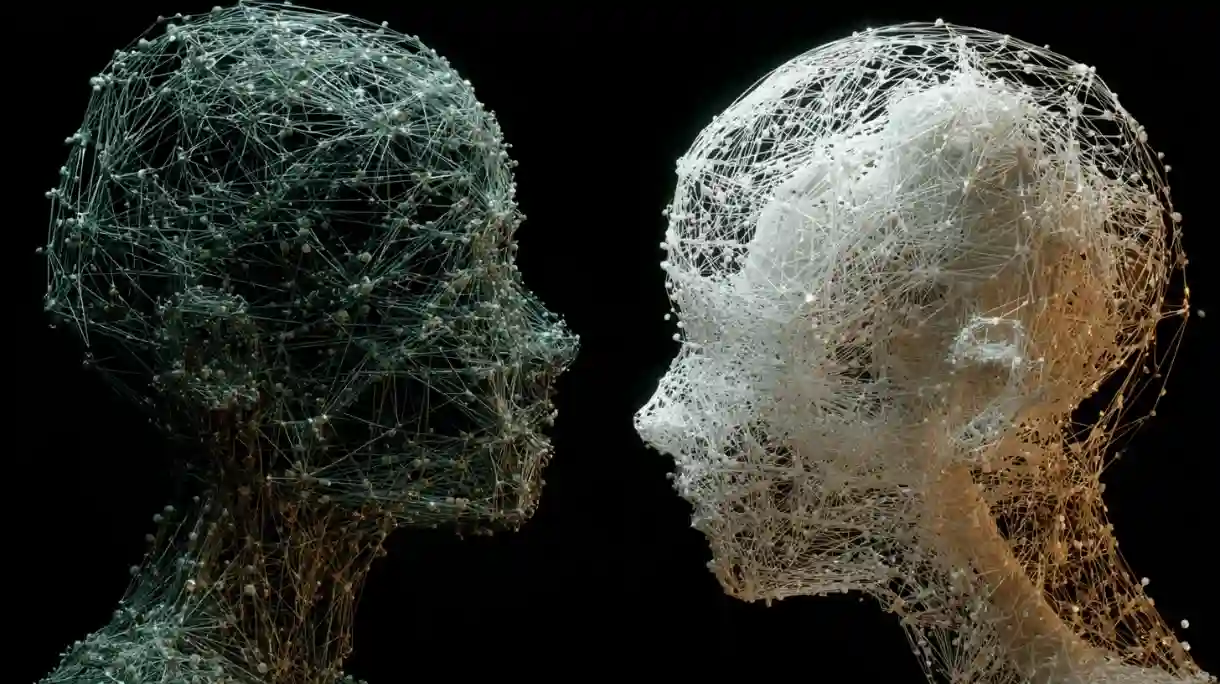

The new system, called Adaptive Heterogeneous Multi-Agent Debate (A-HMAD), assigns different AI agents distinct roles, such as logical reasoning, factual verification, or strategic planning.

Rather than voting blindly, the agents debate each step of the solution. A coordination mechanism determines which agent should speak at each stage, while a consensus optimizer weighs their arguments based on reliability and confidence.
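To make the difference between blind voting and weighted consensus concrete, here is a minimal Python sketch. It is not the researchers' implementation: the `Proposal` class, the role names, and the fixed `RELIABILITY` weights are all assumptions made for illustration, and the paper describes a learned consensus module rather than hard-coded weights.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class Proposal:
    """One agent's answer after the debate (hypothetical structure)."""
    role: str          # e.g. "logic", "fact_check", "planning"
    answer: str        # the answer this agent argues for
    confidence: float  # self-reported confidence in [0, 1]


def majority_vote(proposals):
    """Blind voting: every agent counts equally."""
    counts = Counter(p.answer for p in proposals)
    return counts.most_common(1)[0][0]


def weighted_consensus(proposals, reliability):
    """Pick the answer with the largest summed reliability * confidence."""
    scores = defaultdict(float)
    for p in proposals:
        # Roles without a known weight get a neutral 1.0 (an assumption).
        scores[p.answer] += reliability.get(p.role, 1.0) * p.confidence
    best = max(scores, key=scores.get)
    return best, dict(scores)


# Hypothetical per-role reliability weights, hard-coded for this sketch.
RELIABILITY = {"logic": 1.0, "fact_check": 0.8, "planning": 0.5}

proposals = [
    Proposal("logic", "42", 0.9),
    Proposal("fact_check", "41", 0.4),
    Proposal("planning", "41", 0.5),
]

vote_answer = majority_vote(proposals)
consensus_answer, scores = weighted_consensus(proposals, RELIABILITY)
print("majority vote:", vote_answer)
print("weighted consensus:", consensus_answer)
```

In this toy case, two hesitant agents outvote one confident, reliable agent under majority voting, while the weighted score favors the better-supported answer. A real system would also need the step-by-step debate and the speaker-selection logic, which this sketch leaves out.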

The result is a better answer and a more transparent reasoning process. According to the researchers:

“On six challenging benchmarks – including arithmetic QA, grade-school math (GSM8K), multifact question answering (MMLU), factual biography generation, and chess strategy – our A-HMAD consistently outperforms prior single-model methods and the original multi-agent debate baseline.”

Results: Fewer Hallucinations, Better Answers

When tested across six challenging benchmarks, including grade-school math, multi-fact reasoning, biographies, and even chess strategy, the debate-based system consistently outperformed both single-model approaches and earlier debate frameworks.

Accuracy improved by 4 to 6 percentage points over standard debate, while factual errors in biography generation dropped by more than 30%. Even more importantly, the system generalized well to new problems, which is a key requirement for real-world deployment. As the researchers explained:

“Notably, A-HMAD achieves 4–6% absolute accuracy gains over standard debate on these tasks, and reduces factual errors by over 30% in biography facts. We provide extensive ablations demonstrating the benefits of agent heterogeneity, additional debate rounds, and the learned consensus module.”
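The two figures in that quote measure different things: an absolute gain is a difference in percentage points, while an error reduction is a share of the errors that existed before. The short Python sketch below shows the arithmetic. All numbers in it are made up for illustration and are not results from the study.

```python
def absolute_gain(baseline_acc, new_acc):
    """Accuracy difference in percentage points."""
    return new_acc - baseline_acc


def relative_reduction(baseline_errors, new_errors):
    """Fraction of the baseline errors that were eliminated."""
    return (baseline_errors - new_errors) / baseline_errors


# Hypothetical values, chosen only to illustrate the two measures.
points = absolute_gain(baseline_acc=80.0, new_acc=85.0)
share = relative_reduction(baseline_errors=20, new_errors=13)

print(f"absolute accuracy gain: {points:.1f} percentage points")
print(f"relative error reduction: {share:.0%}")
```

With these invented inputs, going from 80% to 85% accuracy is a 5-point absolute gain, and cutting 20 factual errors to 13 is a 35% relative reduction.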

Why This Matters Beyond Math

The implications extend far beyond arithmetic. Reliable reasoning is essential for AI used in classrooms, scientific research, finance, law, and medicine. By mimicking how humans resolve disagreement, through structured debate rather than blind consensus, the system offers a blueprint for safer, more trustworthy AI. As the authors point out:

“Our findings suggest that an adaptive, role-diverse debating ensemble can drive significant advances in LLM-based educational reasoning, paving the way for safer, more interpretable, and pedagogically reliable AI systems.”

The researchers caution that more work is necessary before deploying such systems widely. Cultural context, conflicting values, and real-world complexity remain open challenges. Still, the findings point toward a future where AI systems don’t just sound smart, but reason more like humans do.
